Undergraduate Researcher

FMI Basel • 2025 - present

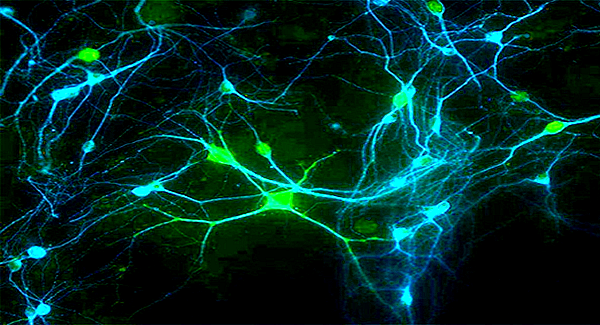

Conducted research in computational neuroscience on the beautiful Novartis Campus, focusing on efficiency in Spiking Neural Networks (SNNs). Collaborated with the amazing Zenke Lab to advance brain-inspired AI algorithms.

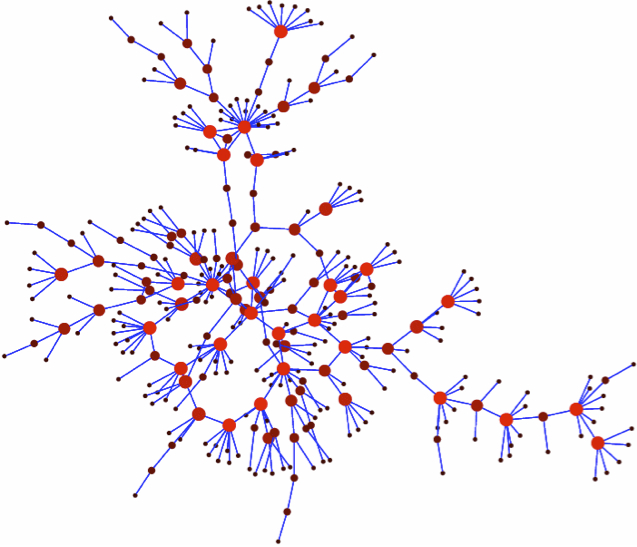

Here, under the guidance of Friedemann Zenke and Julia Gygax, and with valuable feedback from the rest of the lab, I developed a novel neuron model, Hard Reset (HR), which matches the accuracy of the leaky integrate-and-fire (LIF) neuron while improving computational efficiency (as measured by EFLOPs [1]). I also developed and tested a new fluctuation-driven initialization [2] to improve the convergence speed and training stability of HR neurons.

Novartis Campus FMI